3 Practice

3.1 Introduction to forecasting practice91

The purpose of forecasting is to improve decision making in the face of uncertainty. To achieve this, forecasts should provide an unbiased guess at what is most likely to happen (the point forecast), along with a measure of uncertainty, such as a prediction interval (PI). Such information will facilitate appropriate decisions and actions.

Forecasting should be an objective, dispassionate exercise, one that is built upon facts, sound reasoning, and sound methods. But since forecasts are created in social settings, they are influenced by organisational politics and personal agendas. As a consequence, forecasts will often reflect aspirations rather than unbiased projections.

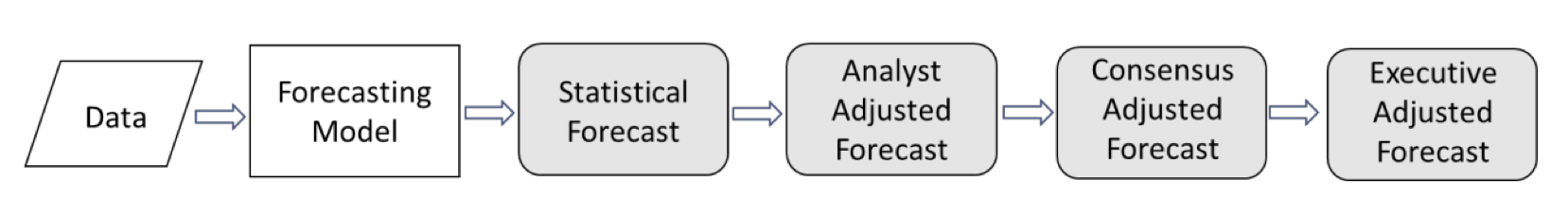

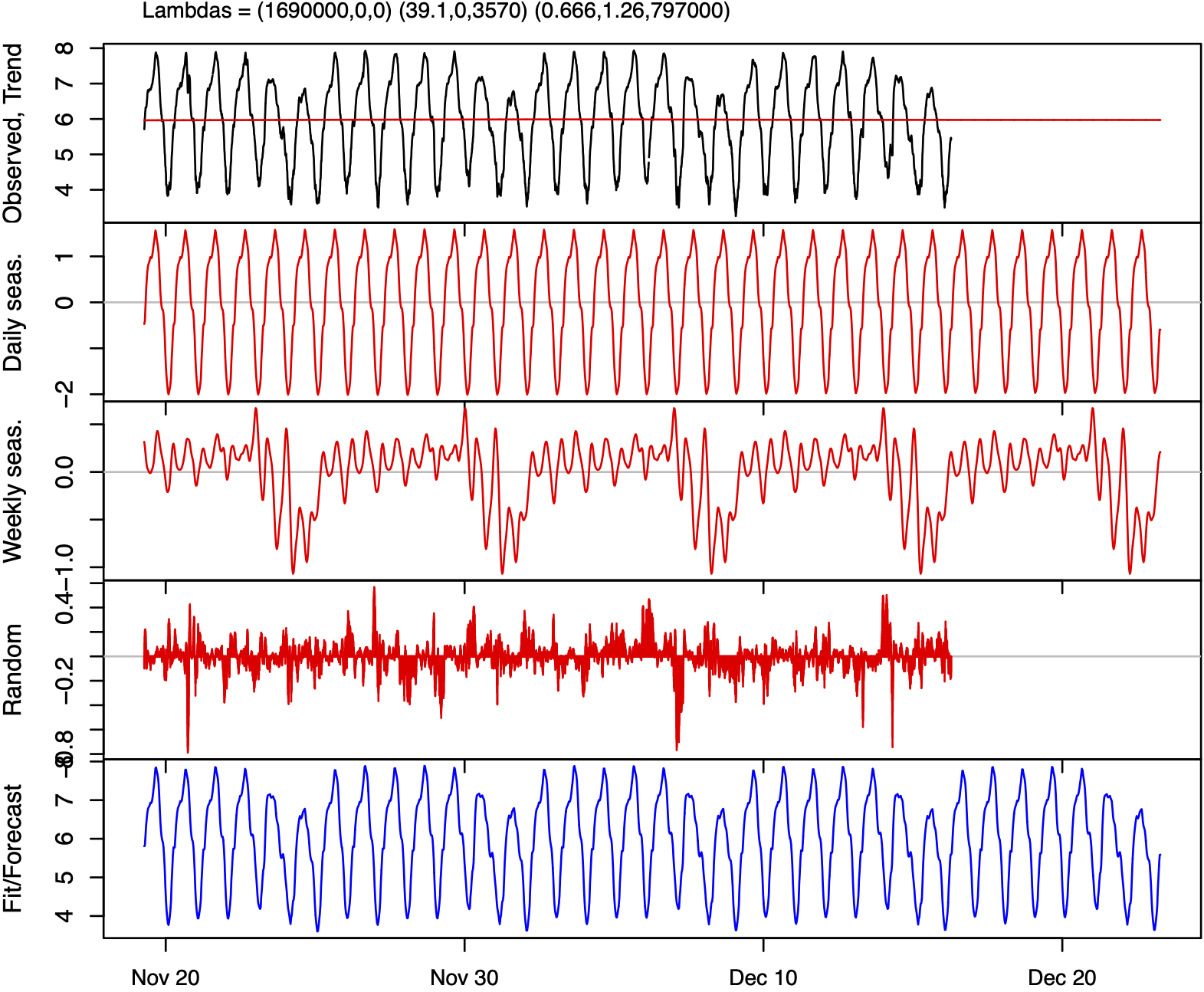

In organisations, forecasts are created through processes that can involve multiple steps and participants. The process can be as simple as executive fiat (also known as evangelical forecasting), unencumbered by what the data show. More commonly, the process begins with a statistical forecast (generated by forecasting software), which is then subject to review and adjustment, as illustrated in Figure 5.

Figure 5: Multi-stage forecasting process.

In concept, such an elaborate multi-stage process allows “management intelligence” to improve forecast quality, incorporating information not accounted for in the statistical model. In reality, however, benefits are not assured. Lawrence, Goodwin, O’Connor, & Önkal (2006) reviewed more than 200 studies, concluding that human judgment can be of significant benefit but is also subject to significant biases. Among the many papers on this subject, there is general agreement on the need to track and review overrides, and the need to better understand the psychological issues around judgmental adjustments.

The underlying problem is that each human touch point subjects the forecast to the interests of the reviewers – and these interests may not align with creating an accurate, unbiased forecast. To identify where such problems are occurring, Forecast Value Added (FVA) analysis is an increasingly popular approach among practitioners.

FVA is defined as the change in a forecasting performance metric that can be attributed to a particular step or participant in the forecasting process (Gilliland, 2002). Any activity that fails to deliver positive FVA (i.e., fails to improve forecast quality) is considered process waste.

Starting with a naive forecast, FVA analysis seeks to determine whether each subsequent step in the process improves upon the prior steps. The “stairstep report” of Table 1 is a familiar way of summarising results, as in this example from Newell Rubbermaid (Schubert & Rickard, 2011).

| Process Step | Forecast accuracy (100%\(-\)MAPE) | FVA vs. Naive | FVA vs. Statistical |

| Naive forecast | 60% | ||

| Statistical forecast | 65% | 5% | |

| Adjusted forecast | 62% | 2% | -3% |

Here, averaged across all products, naive (random walk) achieved forecast accuracy of 60%. The company’s statistical forecast delivered five percentage points of improvement, but management review and adjustment delivered negative value. Such findings – not uncommon – urge further investigation into causes and possible process corrections (such as training reviewers or limiting adjustments). Alternatively, the management review step could be eliminated, providing the dual benefits of freeing up management time spent on forecasting and, on average, more accurate forecasts.

Morlidge (2014c) expanded upon FVA analysis to present a strategy for prioritising judgmental adjustments, finding the greatest opportunity for error reduction in products with high volume and high relative absolute error. Chase (2021) described a machine learning (ML) method to guide forecast review, identifying which forecasts are most likely to benefit from adjustment along with a suggested adjustment range. Baker (2021) used ML classification models to identify characteristics of non-value adding overrides, proposing the behavioural economics notion of a “nudge” to prompt desired forecaster behaviour. Further, Goodwin, Petropoulos, & Hyndman (2017) derived upper bounds for FVA relative to naive forecasts. And Kok (2017) created a Stochastic Value Added (SVA) metric to assess the difference between actual and forecasted distributions, knowledge of which is valuable for inventory management.

Including an indication of uncertainty around the point forecast remains an uncommon practice. Prediction intervals in software generally underestimate uncertainty, often dramatically, leading to unrealistic confidence in the forecast. And even when provided, PIs largely go unused by practitioners. Goodwin (2014) summarised the psychological issues, noting that the generally poor calibration of the PIs may not explain the reluctance to utilise them. Rather, “an interval forecast may accurately reflect the uncertainty, but it is likely to be spurned by decision makers if it is too wide and judged to be uninformative” (Goodwin, 2014, p. 5).

It has long been recognised (Chatfield, 1986; Lawrence, 2000) that the practice of forecasting falls well short of the potential exhibited in academic research, and revealed by the M forecasting competitions. In the M4, a simple benchmark combination method (the average of Single, Holt, and Damped exponential smoothing) reduced the overall weighted average (OWA) error by 17.9% compared to naive. The top six performing methods in M4 further reduced OWA by over 5% compared to the combination benchmark (Makridakis et al., 2020b). But in forecasting practice, just bettering the accuracy of naive has proven to be a surprising challenge. Morlidge (2014b)’s study of eight consumer and industrial businesses found 52% of their forecasts failed to do so. And, as shown, Newel Rubbermaid beat naive by just two percentage points after management adjustments.

Ultimately, forecast accuracy is limited by the nature of the behaviour being forecast. But even a highly accurate forecast is of little consequence if overridden by management and not used to enhance decision making and improve organisational performance.

Practitioners need to recognise limits to forecastability and be willing to consider alternative (non-forecasting) approaches when the desired level of accuracy is not achievable (Gilliland, 2010). Alternatives include supply chain re-engineering – to better react to unforeseen variations in demand, and demand smoothing – leveraging pricing and promotional practices to shape more favourable demand patterns.

Despite measurable advances in our statistical forecasting capabilities (Spyros Makridakis et al., 2020a), it is questionable whether forecasting practice has similarly progressed. The solution, perhaps, is what Morlidge (2014a) (page 39) suggests that “users should focus less on trying to optimise their forecasting process than on detecting where their process is severely suboptimal and taking measures to redress the problem”. This is where FVA can help.

For now, the challenge for researchers remains: To prompt practitioners to adopt sound methods based on the objective assessment of available information, and avoid the “worst practices” that squander resources and fail to improve the forecast.

3.2 Operations and supply chain management

3.2.1 Demand management92

Demand management is one of the dominant components of supply chain management (Fildes, Goodwin, & Lawrence, 2006). Accurate demand estimate of the present and future is a first vital step for almost all aspects of supply chain optimisation, such as inventory management, vehicle scheduling, workforce planning, and distribution and marketing strategies (Kolassa & Siemsen, 2016). Simply speaking, better demand forecasts can yield significantly better supply chain management, including improved inventory management and increased service levels. Classic demand forecasts mainly rely on qualitative techniques, based on expert judgment and past experience (e.g., Weaver, 1971), and quantitative techniques, based on statistical and machine learning modelling (e.g., James W Taylor, 2003b; Bacha & Meyer, 1992). A combination of qualitative and quantitative methods is also popular and proven to be beneficial in practice by, e.g., judgmental adjustments (Önkal & Gönül, 2005; Aris A Syntetos et al., 2016b and §2.11.2; Turner, 1990), judgmental forecast model selection (Han et al., 2019 and §2.11.3; Petropoulos et al., 2018b), and other advanced forecasting support systems (Arvan, Fahimnia, Reisi, & Siemsen, 2019 see also §3.7.1; Baecke, De Baets, & Vanderheyden, 2017).

The key challenges that demand forecasting faces vary from domain to domain. They include:

The existence of intermittent demands, e.g., irregular demand patterns of fashion products. According to Nikolopoulos (2020), limited literature has focused on intermittent demand. The seminal work by Croston (1972) was followed by other representative methods such as the SBA method by Syntetos & Boylan (2001), the aggregate–disaggregate intermittent demand approach (ADIDA) by Nikolopoulos et al. (2011), the multiple temporal aggregation by Petropoulos & Kourentzes (2015), and the \(k\) nearest neighbour (\(k\)NN) based approach by Nikolopoulos et al. (2016). See §2.8 for more details on intermittent demand forecasting and §2.10.2 for a discussion on temporal aggregation.

The emergence of new products. Recent studies on new product demand forecasting are based on finding analogies (Hu, Acimovic, Erize, Thomas, & Van Mieghem, 2019; Wright & Stern, 2015), leveraging comparable products (Baardman, Levin, Perakis, & Singhvi, 2018), and using external information like web search trends (Kulkarni, Kannan, & Moe, 2012). See §3.2.6 for more details on new product demand forecasting.

The existence of short-life-cycle products, e.g., smartphone demand (e.g., Szozda, 2010; Chung, Niu, & Sriskandarajah, 2012; Shi et al., 2020).

The hierarchical structure of the data such as the electricity demand mapped to a geographical hierarchy (e.g., Athanasopoulos et al., 2009; Hong et al., 2019 but also §2.10.1; Hyndman et al., 2011).

With the advent of the big data era, a couple of coexisting new challenges have drawn the attention of researchers and practitioners in the forecasting community: the need to forecast a large volume of related time series (e.g., thousands or millions of products from one large retailer: Salinas et al., 2019a), and the increasing number of external variables that have significant influence on future demand (e.g., massive amounts of keyword search indices that could impact future tourism demand (Law, Li, Fong, & Han, 2019)). Recently, to deal with these new challenges, numerous empirical studies have identified the potentials of deep learning based global models, in both point and probabilistic demand forecasting (e.g., Wen et al., 2017; Bandara et al., 2020b; Rangapuram et al., 2018; Salinas et al., 2019a). With the merits of cross-learning, global models have been shown to be able to learn long memory patterns and related effects (Montero-Manso & Hyndman, 2020), latent correlation across multiple series (Smyl, 2020), handle complex real-world forecasting situations such as data sparsity and cold-starts (Chen, Kang, Chen, & Wang, 2020), include exogenous covariates such as promotional information and keyword search indices (Law et al., 2019), and allow for different choices of distributional assumptions (Salinas et al., 2019a).

3.2.2 Forecasting in the supply chain93

A supply chain is ‘a network of stakeholders (e.g., retailers, manufacturers, suppliers) who collaborate to satisfy customer demand’ (Perera et al., 2019). Forecasts inform many supply chain decisions, including those relating to inventory control, production planning, cash flow management, logistics and human resources (also see §3.2.1). Typically, forecasts are based on an amalgam of statistical methods and management judgment (Fildes & Goodwin, 2007). Hofmann & Rutschmann (2018) have investigated the potential for using big data analytics in supply chain forecasting but indicate more research is needed to establish its usefulness.

In many organisations forecasts are a crucial element of Sales and Operations Planning (S&OP), a tool that brings together different business plans, such as those relating to sales, marketing, manufacturing and finance, into one integrated set of plans (Thomé, Scavarda, Fernandez, & Scavarda, 2012). The purposes of S&OP are to balance supply and demand and to link an organisation’s operational and strategic plans. This requires collaboration between individuals and functional areas at different levels because it involves data sharing and achieving a consensus on forecasts and common objectives (Mello, 2010). Successful implementations of S&OP are therefore associated with forecasts that are both aligned with an organisation’s needs and able to draw on information from across the organisation. This can be contrasted with the ‘silo culture’ identified in a survey of companies by Moon, Mentzer, & Smith (2003) where separate forecasts were prepared by different departments in ‘islands of analysis’. Methods for reconciling forecasts at different levels in both cross-sectional hierarchies (e.g., national, regional and local forecasts) and temporal hierarchies (e.g., annual, monthly and daily forecasts) are also emerging as an approach to break through information silos in organisations (see §2.10.1, §2.10.2, and §2.10.3). Cross-temporal reconciliation provides a data-driven approach that allows information to be drawn from different sources and levels of the hierarchy and enables this to be blended into coherent forecasts (Kourentzes & Athanasopoulos, 2019).

In some supply chains, companies have agreed to share data and jointly manage planning processes in an initiative known as Collaborative Planning, Forecasting, and Replenishment (CPFR) (Seifert, 2003 also see §3.2.3). CPFR involves pooling information on inventory levels and on forthcoming events, like sales promotions. Demand forecasts can be shared, in real time via the Internet, and discrepancies between them reconciled. In theory, information sharing should reduce forecast errors. This should mitigate the ‘bullwhip effect’ where forecast errors at the retail-end of supply chains cause upstream suppliers to experience increasingly volatile demand, forcing them to hold high safety stock levels (Lee et al., 2007). Much research demonstrating the benefits of collaboration has involved simulated supply chains (Fildes, 2017). Studies of real companies have also found improved performance through collaboration (e.g., Boone & Ganeshan, 2008; Eksoz, Mansouri, Bourlakis, & Önkal, 2019; Hill, Zhang, & Miller, 2018), but case study evidence is still scarce (Aris A Syntetos et al., 2016a). The implementation of collaborative schemes has been slow with many not progressing beyond the pilot stage (Galbreth, Kurtuluş, & Shor, 2015; Panahifar, Byrne, & Heavey, 2015). Barriers to successful implementation include a lack of trust between organisations, reward systems that foster a silo mentality, fragmented forecasting systems within companies, incompatible systems, a lack of relevant training and the absence of top management support (Fliedner, 2003; Thomé, Hollmann, & Scavarda do Carmo, 2014).

Initiatives to improve supply chain forecasting can be undermined by political manipulation of forecasts and gaming. Examples include ‘enforcing’: requiring inflated forecasts to align them with sales or financial goals, ‘sandbagging’: underestimating sales so staff are rewarded for exceeding forecasts, and ‘spinning’: manipulating forecasts to garner favourable reactions from colleagues (Mello, 2009). Pennings, Dalen, & Rook (2019) discuss schemes for correcting such intentional biases.

For a discussion of the forecasting of returned items in supply chains, see §3.2.9, while §3.9 offers a discussion of possible future developments in supply chain forecasting.

3.2.3 Forecasting for inventories94

Three aspects of the interaction between forecasting and inventory management have been studied in some depth and are the subject of this review: the bullwhip effect, forecast aggregation, and performance measurement.

The ‘bullwhip effect’ occurs whenever there is amplification of demand variability through the supply chain (Lee, Padmanabhan, & Whang, 2004), leading to excess inventories. This can be addressed by supply chain members sharing downstream demand information, at stock keeping unit level, to take advantage of less noisy data. Analytical results on the translation of ARIMA (see §2.3.4) demand processes have been established for order-up-to inventory systems (Gilbert, 2005). There would be no value in information sharing if the wholesaler can use such relationships to deduce the retailer’s demand process from their orders (see, for example, Graves, 1999). Such deductions assume that the retailer’s demand process and demand parameters are common knowledge to supply chain members. Ali & Boylan (2011) showed that, if such common knowledge is lacking, there is value in sharing the demand data itself and Ali, Boylan, & Syntetos (2012) established relationships between accuracy gains and inventory savings. Analytical research has tended to assume that demand parameters are known. Pastore, Alfieri, Zotteri, & Boylan (2020) investigated the impact of demand parameter uncertainty, showing how it exacerbates the bullwhip effect.

Forecasting approaches have been developed that are particularly suitable in an inventory context, even if not originally proposed to support inventory decisions. For example, Nikolopoulos et al. (2011) proposed that forecasts could be improved by aggregating higher frequency data into lower frequency data (see also §2.10.2; other approaches are reviewed in §3.2.1). Following this approach, Forecasts are generated at the lower frequency level and then disaggregated, if required, to the higher frequency level. For inventory replenishment decisions, the level of aggregation may conveniently be chosen to be the lead time, thereby taking advantage of the greater stability of data at the lower frequency level, with no need for disaggregation.

The variance of forecast errors over lead time is required to determine safety stock requirements for continuous review systems. The conventional approach is to take the variance of one-step-ahead errors and multiply it by the lead time. However, this estimator is unsound, even if demand is independent and identically distributed, as explained by Prak, Teunter, & Syntetos (2017). A more direct approach is to smooth the mean square errors over the lead time (Syntetos & Boylan, 2006).

Strijbosch & Moors (2005) showed that unbiased forecasts will not necessarily lead to achievement, on average, of target cycle service levels or fill rates. Wallström & Segerstedt (2010) proposed a ‘Periods in Stock’ measure, which may be interpreted, based on a ‘fictitious stock’, as the number of periods a unit of the forecasted item has been in stock or out of stock. Such measures may be complemented by a detailed examination of error-implication metrics (Boylan & Syntetos, 2006). For inventory management, these metrics will typically include inventory holdings and service level implications (e.g., cycle service level, fill rate). Comparisons may be based on total costs or via ‘exchange curves’, showing the trade-offs between service and inventory holding costs. Comparisons such as these are now regarded as standard in the literature on forecasting for inventories and align well with practice in industry.

3.2.4 Forecasting in retail95

Retail companies depend crucially on accurate demand forecasting to manage their supply chain and make decisions concerning planning, marketing, purchasing, distribution and labour force. Inaccurate forecasts lead to unnecessary costs and poor customer satisfaction. Inventories should be neither too high (to avoid waste and extra costs of storage and labour force), nor too low (to prevent stock-outs and lost sales (S. Ma & Fildes, 2017)). A comprehensive review of forecasting in retail was given by R. Fildes et al. (2019b), with an appendix covering the COVID pandemic in Fildes, Kolassa, & Ma (2021).

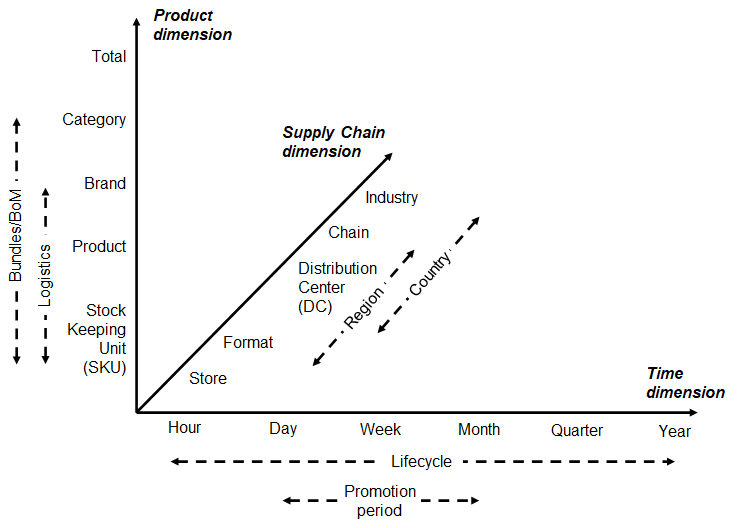

Figure 6: Retail forecasting hierarchy.

Forecasting retail demand happens in a three-dimensional space (Syntetos et al., 2016a): the position in the supply chain hierarchy (store, distribution centre, or chain), the level in the product hierarchy (SKU, brand, category, or total) and the time granularity (day, week, month, quarter, or year). In general, the higher is the position in the supply chain, the lower is the time granularity required, e.g., retailers need daily forecasts for store replenishment and weekly forecasts for DC distribution/logistics activities at the SKU level (Fildes et al., 2019b). Hierarchical forecasting (see §2.10.1) is a promising tool to generate coherent demand forecasts on multiple levels over different dimensions (Oliveira & Ramos, 2019).

Several factors affect retail sales, which often increase substantially during holidays, festivals, and other special events. Price reductions and promotions on own and competitors’ products, as well as weather conditions or pandemics, can also change sales considerably (Huang, Fildes, & Soopramanien, 2019). These factors can coincide to increase complexity yet more, like the weather in the days before public holidays having a major impact on customer demand (Obermair, Holzapfel, & Kuhn, 2023).

Zero sales due to stock-outs or low demand occur very often at the SKU \(\times\) store level, both at weekly and daily granularity. The most appropriate forecasting approaches for intermittent demand are Croston’s method (Croston, 1972), the Syntetos-Boylan approximation (SBA; Aris A Syntetos & Boylan, 2005), and the TSB method (Teunter, Syntetos, & Zied Babai, 2011), all introduced in §2.8.1. These methods were originally proposed to forecast sales of spare parts in automotive and aerospace industries. Intermittent demand forecasting methods are starting to be applied to retail demand (Kolassa, 2016), and the recent M5 competition (Makridakis et al., 2022a, 2022b) will undoubtedly spur further research in this direction.

Univariate forecasting models are the most basic methods retailers may use to forecast demand. They range from simple methods such as simple moving averages or exponential smoothing to ARIMA and ETS models (discussed in §2.3). These are particularly appropriate to forecast demand at higher aggregation levels (Ramos & Oliveira, 2016; Ramos, Santos, & Rebelo, 2015). The main advantage of linear causal methods such as multiple linear regression is to allow the inclusion of external effects discussed above. Simple methods were for a long time found to be superior to more complex and Machine Learning methods (Fildes et al., 2019b), until the M5 competition (Makridakis et al., 2022a, 2022b) showed that ML methods, in particular Gradient Boosting, could indeed outperform them. However, simple methods making due allowance for demand drivers as listed above still have the advantage of being easier to interpret and simpler to care for, so they will continue to have a place in retail forecasting.

To be effective, point estimates should be combined with quantile predictions or prediction intervals for determining safety stock amounts needed for replenishment. However, to the best of our knowledge this is an under-investigated aspect of retail forecasting (Kolassa, 2016; Taylor, 2007), although recent research is starting to address this issue (Hoeltgebaum, Borenstein, Fernandes, & Veiga, 2021).

The online channel accounts for an ever-increasing proportion of retail sales and poses unique challenges to forecasting, beyond the characteristics of brick and mortar (B&M) retail stores. First, there are multiple drivers or predictors of demand that could be leveraged in online retail, but not in B&M:

Online retailers can fine-tune customer interactions, e.g., through the landing page, product recommendations, or personalised promotions, leveraging the customer’s purchasing, browsing or returns history, current shopping cart contents, or the retailer’s stock position, in order to tailor the message to one specific customer in a way that is impossible in B&M.

Conversely, product reviews are a type of interaction between the customer and the retailer and other customers which drives future demand.

Next, there are differences in forecast use:

Forecast use strongly depends on the retailer’s omnichannel strategy (Armstrong, 2017; Melacini, Perotti, Rasini, & Tappia, 2018; Sopadjieva, Dholakia, & Benjamin, 2017): e.g., for “order online, pick up in store” or “ship from store” fulfillment, we need separate but related forecasts for both total online demand and for the demand fulfilled at each separate store.

Online retailers, especially in fashion, have a much bigger problem with product returns. They may need to forecast how many products are returned overall (e.g., G. Shang et al., 2020), or whether a specific customer will return a specific product.

Finally, there are differences in the forecasting process:

B&M retailers decouple pricing/promotion decisions and optimisation from the customer interaction, and therefore from forecasting. Online, this is not possible, because the customer has total transparency to competitors’ offerings. Thus, online pricing needs to react much more quickly to competitive pressures – faster than the forecasting cycle.

Thus, the specific value of predictors is often not known at the time of forecasting: we don’t know yet which customer will log on, so we don’t know yet how many people will see a particular product displayed on their personalised landing page. (Nor do we know today what remaining stock will be displayed.) Thus, changes in drivers need to be “baked into” the forecasting algorithm.

Feedback loops between forecasting and other processes are thus even more important online: yesterday’s forecasts drive today’s stock position, driving today’s personalised recommendations, driving demand, driving today’s forecasts for tomorrow. Overall, online retail forecasting needs to be more agile and responsive to the latest interactional decisions taken in the web store, and more tightly integrated into the retailer’s interactional tactics and omnichannel strategy.

Systematic research on demand forecasting in an online or omnnichannel context is only starting to appear (e.g. Omar, Klibi, Babai, & Ducq, 2021 who use basket data from online sales to improve omnichannel retail forecasts).

3.2.5 Promotional forecasting96

Promotional forecasting is central for retailing (see §3.2.4), but also relevant for many manufacturers, particularly of Fast Moving Consumer Goods (FMCG). In principle, the objective is to forecast sales, as in most business forecasting cases. However, what sets promotional forecasting apart is that we also make use of information about promotional plans, pricing, and sales of complementary and substitute products (Bandyopadhyay, 2009; J.-L. Zhang et al., 2008). Other relevant variables may include store location and format, variables that capture the presentation and location of a product in a store, proxies that characterise the competition, and so on (Andrews, Currim, Leeflang, & Lim, 2008; Van Heerde, Leeflang, & Wittink, 2002).

Three modelling considerations guide us in the choice of models. First, promotional (and associated effects) are proportional. For instance, we do not want to model the increase in sales as an absolute number of units, but instead, as a percentage uplift. We do this to not only make the model applicable to both smaller and larger applications, for example, small and large stores in a retailing chain, but also to gain a clearer insight into the behaviour of our customers. Second, it is common that there are synergy effects. For example, a promotion for a product may be offset by promotions for substitute products. Both these considerations are easily resolved if we use multiplicative regression models. However, instead of working with the multiplicative models, we rely on the logarithmic transformation of the data (see §2.2.1) and proceed to construct the promotional model using the less cumbersome additive formulation (see §2.3.2). Third, the objective of promotional models does not end with providing accurate predictions. We are also interested in the effect of the various predictors: their elasticity. This can in turn provide the users with valuable information about the customers, but also be an input for constructing optimal promotional and pricing strategies (Zhang et al., 2008).

Promotional models have been widely used on brand-level data (for example, Divakar, Ratchford, & Shankar, 2005). However, they are increasingly used on Stock Keeping Unit (SKU) level data (Ma, Fildes, & Huang, 2016; Trapero, Kourentzes, & Fildes, 2015), given advances in modelling techniques. Especially at that level, limited sales history and potentially non-existing examples of past promotions can be a challenge. Trapero et al. (2015) consider this problem and propose using a promotional model that has two parts that are jointly estimated. The first part focuses on the time series dynamics and is modelled locally for each SKU. The second part tackles the promotional part, which pools examples of promotions across SKUs to enable providing reasonable estimates of uplifts even for new SKUs. To ensure the expected heterogeneity in the promotional effects, the model is provided with product group information. Another recent innovation is looking at modelling promotional effects both at the aggregate brand or total sales level, and disaggregate SKU level, relying on temporal aggregation (Kourentzes & Petropoulos, 2016 and §2.10.2). Ma et al. (2016) concern themselves with the intra-and inter-category promotional information. The challenge now is the number of variables to be considered for the promotional model, which they address by using sequential LASSO (see also §2.5.3). Although the aforementioned models have shown very promising results, one has to recognise that in practice promotions are often forecasted using judgmental adjustments, with inconsistent performance (Trapero, Pedregal, Fildes, & Kourentzes, 2013); see also §2.11.2 and §3.7.3.

3.2.6 New product forecasting97

Forecasting the demand for a new product accurately has even more consequence with regards to well-being of the companies than that for a product already in the market. However, this task is one of the most difficult tasks managers must deal with simply because of non-availability of past data (Wind, 1981). Much work has been going on for the last five decades in this field. Despite his Herculean attempt to collate the methods reported, Assmus (1984) could not list all even at that time. The methods used before and since could be categorised into three broad approaches (Goodwin et al., 2013a) namely management judgment, consumer judgment and diffusion/formal mathematical models. In general, the hybrid methods combining different approaches have been found to be more useful (Hyndman & Athanasopoulos, 2018; Peres et al., 2010). Most of the attempts in New product Forecasting (NPF) have been about forecasting ‘adoption’ (i.e., enumerating the customers who bought at least one time) rather than ‘sales’, which accounts for repeat purchases also. In general, these attempts dealt with point forecast although there have been some attempts in interval and density forecasting (Meade & Islam, 2001).

Out of the three approaches in NPF, management judgment is the most used approach (Gartner & Thomas, 1993; Kahn, 2002; Lynn, Schnaars, & Skov, 1999) which is reported to have been carried out by either individual managers or group of them. Ozer (2011) and Surowiecki (2005) articulated their contrasting benefits and deficits. The Delphi method (see §2.11.4) has combined the benefits of these two modes of operation (Rowe & Wright, 1999) which has been effective in NPF. Prediction markets in the recent past offered an alternative way to aggregate forecasts from a group of Managers (Meeran, Dyussekeneva, & Goodwin, 2013; Wolfers & Zitzewitz, 2004) and some successful application of prediction markets for NPF have been reported by Plott & Chen (2002) and Karniouchina (2011).

In the second category, customer surveys, among other methods, are used to directly ask the customers the likelihood of them purchasing the product. Such surveys are found to be not very reliable (Morwitz, 1997). An alternative method to avoid implicit bias associated with such surveys in extracting inherent customer preference is conjoint analysis, which makes implicit trade off customers make between features explicit by analysing the customers’ preference for different variants of the product. One analysis technique that attempts to mirror real life experience more is Choice Based Conjoint analysis (CBC) in which customers choose the most preferred product among available choices. Such CBC models used together with the analysis tools such as Logit (McFadden, 1977) have been successful in different NPF applications (Meeran, Jahanbin, Goodwin, & Quariguasi Frota Neto, 2017).

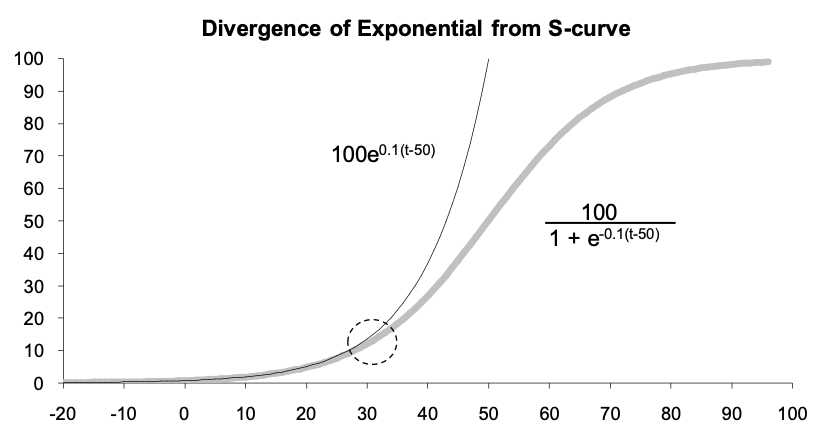

In the third approach, mathematical/formal models known as growth or diffusion curves (see §2.3.18 and §2.3.19) have been used successfully to do NPF (Hu et al., 2019). The non-availability of past data is mitigated by growth curves by capturing the generic pattern of the demand growth of a class of products, which could be defined by a limited number of parameters such as saturation level, inflexion point, etc. For a new product a growth curve can be constituted from well-estimated parameters using analogous products, market intelligence or regression methods. Most extensively used family of growth curves for NPF has started with Bass model (Bass, 1969) that has been extended extensively (Bass, Gordon, Ferguson, & Githens, 2001; Easingwood, Mahajan, & Muller, 1983; Islam & Meade, 2000; Peres et al., 2010; Simon & Sebastian, 1987). A recent applications of NPF focused on consumer electronic goods using analogous products (Goodwin et al., 2013a).

3.2.7 Spare parts forecasting98

Spare parts are ubiquitous in modern societies. Their demand arises whenever a component fails or requires replacement. Demand for spare parts is typically intermittent, which means that it can be forecasted using the plethora of parametric and non-parametric methods presented in §2.8. In addition to the intermittence of demand, spare parts have two additional characteristics that make them different from Work-In-Progress and final products, namely: (i) they are generated by maintenance policies and part breakdowns, and (ii) they are subject to obsolescence (Bacchetti & Saccani, 2012; Kennedy, Wayne Patterson, & Fredendall, 2002).

The majority of forecasting methods do not link the demand to the generating factors, which are often related to maintenance activities. The demand for spare parts originates from the replacement of parts in the installed base of machines (i.e., the location and number of products in use), either preventively or upon breakdown of the part (Kim, Dekker, & Heij, 2017). Fortuin (1984) claims that using installed base information to forecast the spare part demand can lead to stock reductions of up to 25%. An overview of the literature that deals with spare parts forecasting with installed base information is given by Van der Auweraer & Boute (2019). Spare parts demand can be driven by the result of maintenance inspections and, in this case, a maintenance-based forecasting model should then be considered to deal with this issue. Such forecasting models include the Delay Time (DT) model analysed in Wang & Syntetos (2011). Using the fitted values of the distribution parameters of a data set related to a hospital pumps, Wang & Syntetos (2011) have shown that when the failure and fault arriving characteristics of the items can be captured, it is recommended to use the DT model to forecast the spare part demand with a higher forecast accuracy. However, when such information is not available, then time series forecasting methods, such as those presented in §2.8.1, are recommended. The maintenance based forecasting is further discussed in §3.2.8.

Given the life cycle of products, spare parts are associated with a risk of obsolescence. Molenaers, Baets, Pintelon, & Waeyenbergh (2012) discussed a case study where 54% of the parts stocked at a large petrochemical company had seen no demand for the last 5 years. Hinton (1999) reported that the US Department of Defence was holding 60% excess of spare parts, with 18% of the parts (with a total value of $1.5 billion) having no demand at all. To take into account the issue of obsolescence in spare parts demand forecasting, Teunter et al. (2011) have proposed the TSB method, which deals with linearly decreasing demand and sudden obsolescence cases. By means of an empirical investigation based on the individual demand histories of 8000 spare parts SKUs from the automotive industry and the Royal Air Force (RAF, UK), Babai, Syntetos, & Teunter (2014) have demonstrated the high forecast accuracy and inventory performance of the TSB method. Other variants of the Croston’s method developed to deal with the risk of obsolescence in forecasting spare parts demand include the Hyperbolic-Exponential Smoothing method proposed by Prestwich, Tarim, Rossi, & Hnich (2014) and the modified Croston’s method developed by Babai, Dallery, Boubaker, & Kalai (2019).

3.2.8 Predictive maintenance99

A common classification of industrial maintenance includes three types of maintenance (Montero Jimenez, Schwartz, Vingerhoeds, Grabot, & Salaün, 2020). Corrective maintenance refers to maintenance actions that occur after the failure of a component. Preventive maintenance consists of maintenance actions that are triggered after a scheduled number of units as cycles, kilometers, flights, etc. To schedule the fixed time between two preventive maintenance actions, the Weibull distribution is commonly used (Baptista et al., 2018). The drawbacks of preventive maintenance are related to the replacement of components that still have a remaining useful life; therefore, early interventions imply a waste of resources and too late actions could imply catastrophic failures. Additionally, the preventive intervention itself could be a source of failures too. Finally, predictive maintenance (PdM) complements the previous ones and, essentially, uses predictive tools to determine when actions are necessary (Carvalho et al., 2019). Within this predictive maintenance group, other terms are usually found in the literature as Condition-Based Maintenance and Prognostic and Health Management, (Montero Jimenez et al., 2020).

The role of forecasting in industrial maintenance is of paramount importance. One application is to forecast spare parts (see §3.2.7), whose demands are typically intermittent, usually required to carry out corrective and preventive maintenances (Van der Auweraer & Boute, 2019; Wang & Syntetos, 2011). On the other hand, it is crucial for PdM the forecast of the remaining useful time, which is the useful life left on an asset at a particular time of operation (Si, Wang, Hu, & Zhou, 2011). This work will be focused on the latter, which is usually found under the prognostic stage (Jardine, Lin, & Banjevic, 2006).

The typology of forecasting techniques employed is very ample. Montero Jimenez et al. (2020) classify them in three groups: physics-based models, knowledge-based models, and data-driven models. Physics-based models require high skills on the underlying physics of the application. Knowledge-based models are based on facts or cases collected over the years of operation and maintenance. Although, they are useful for diagnostics and provide explicative results, its performance on prognostics is more limited. In this sense, data-driven models are gaining popularity for the development of computational power, data acquisition, and big data platforms. In this case, data coming from vibration analysis, lubricant analysis, thermography, ultrasound, etc. are usually employed. Here, well-known forecasting models as VARIMAX/GARCH (see also §2.3) are successfully used (Baptista et al., 2018; Cheng, Yu, & Chen, 2012; Garcı́a, Pedregal, & Roberts, 2010; Gomez Munoz, De la Hermosa Gonzalez-Carrato, Trapero Arenas, & Garcia Marquez, 2014). State Space models based on the Kalman Filter are also employed (Pedregal & Carmen Carnero, 2006; Pedregal, Garcı́a, & Roberts, 2009 and §2.3.6). Recently, given the irruption of the Industry 4.0, physical and digital systems are getting more integrated and Machine Learning/Artificial Intelligence are drawing the attention of practitioners and academics alike (Carvalho et al., 2019). In that same reference, it is found that the most frequently used Machine Learning methods in PdM applications were Random Forest, Artificial Neural Networks, Support Vector Machines and K-means.

3.2.9 Reverse logistics100

As logistics and supply chain operations rely upon accurate demand forecasts (see also §3.2.2), reverse logistics and closed loop supply chain operations rely upon accurate forecasts of returned items. Such items (usually referred as cores) can be anything from reusable shipping or product containers to used laptops, mobile phones or car engines. If some (re)manufacturing activity is involved in supply chains, it is both demand and returned items forecasts that are needed since it is net demand requirements (demand – returns) that drive remanufacturing operations.

Forecasting methods that are known to work well when applied to demand forecasting, such as SES for example (see §2.3.1), do not perform well when applied to time-series of returns because they assume returns to be a process independent of sales. There are some cases when this independence might hold, such as when a recycler receives items sold by various companies and supply chains (Goltsos & Syntetos, 2020). In these cases, simple methods like SES applied on the time series of returns might prove sufficient. Typically though, returns are strongly correlated with past sales and the installed base (number of products with customers). After all, there cannot be a product return if a product has not first been sold. This lagged relationship between sales and returns is key to the effective characterisation of the returns process.

Despite the increasing importance of circular economy and research on closed loop supply chains, returns forecasting has not received sufficient attention in the academic literature (notable contributions in this area include Goh & Varaprasad, 1986; Brito & Laan, 2009; Clottey, Benton, & Srivastava, 2012; Toktay, 2003; Toktay, Wein, & Zenios, 2000). The seminal work by Kelle & Silver (1989) offers a useful framework to forecasting that is based on the degree of available information about the relationship between demand and returns. Product level (PL) information consists of the time series of sales and returns, alongside information on the time each product spends with a customer. The question then is how to derive this time to return distribution. This can be done through managerial experience, by investigating the correlation of the demand and the returns time series, or by serialising and tracking a subset (sample) of items. Past sales can then be used in conjunction with this distribution to create forecasts of returns. Serial number level (SL) information, is more detailed and consists of the time matching of an individual unit item’s issues and returns and thus exactly the time each individual unit, on a serial number basis, spent with the customer. Serialisation allows for a complete characterisation of the time to return distribution. Very importantly, it also enables tracking exactly how many items previously sold remain with customers, providing time series of unreturned past sales. Unreturned past sales can then be extrapolated — along with a time to return distribution — to create forecasts of returns.

Goltsos, Syntetos, & Laan (2019) offered empirical evidence in the area of returns forecasting by analysing a serialised data set from a remanufacturing company in North Wales. They found the Beta probability distribution to best fit times-to-return. Their research suggests that serialisation is something worthwhile pursuing for low volume products, especially if they are expensive. This makes a lot of sense from an investment perspective, since the relevant serial numbers are very few. However, they also provided evidence that such benefits expand in the case of high volume items. Importantly, the benefits of serialisation not only enable the implementation of the more complex SL method, but also the accurate characterisation of the returns process, thus also benefiting the PL method (which has been shown to be very robust).

3.3 Economics and finance

3.3.1 Macroeconomic survey expectations101

Macroeconomic survey expectations allow tests of theories of how agents form their expectations. Expectations play a central role in modern macroeconomic research (Gali, 2008). Survey expectations have been used to test theories of expectations formation for the last 50 years. Initially the Livingston survey data on inflationary expectations was used to test extrapolative or adaptive hypothesis, but the focus soon turned to testing whether expectations are formed rationally (see Turnovsky & Wachter (1972), for an early contribution). According to Muth (1961) p.316, rational expectations is the hypothesis that: ‘expectations, since they are informed predictions of future events, are essentially the same as the predictions of the relevant economic theory.’ This assumes all agents have access to all relevant information. Instead, one can test whether agents make efficient use of the information they possess. This is the notion of forecast efficiency (Mincer & Zarnowitz, 1969), and can be tested by regressing the outturns on a constant and the forecasts of those outturns. Under forecast efficiency, the constant should be zero and the coefficient on the forecasts should be one. When the slope coefficient is not equal to one, the forecast errors will be systematically related to information available at the forecast origin, namely, the forecasts, and cannot be optimal. The exchange between Figlewski & Wachtel (1981, 1983) and Dietrich & Joines (1983) clarifies the role of partial information in testing forecast efficiency (that is, full information is not necessary), and shows that the use of the aggregate or consensus forecast in the individual realisation-forecast regression outlined above will give rise to a slope parameter less than one when forecasters are efficient but possess partial information. Zarnowitz (1985), Keane & Runkle (1990) and Bonham & Cohen (2001) consider pooling across individuals in the realisation-forecast regression, and the role of correlated shocks across individuals.

Recently, researchers considered why forecasters might not possess full-information, stressing informational rigidities: sticky information (see, inter alia, Mankiw & Reis, 2002; Mankiw, Reis, & Wolfers, 2003), and noisy information (see, inter alia, Woodford, 2002; Sims, 2003). Coibion & Gorodnichenko (2012, 2015) test these models using aggregate quantities, such as mean errors and revisions.

Forecaster behaviour can be characterised by the response to new information (see also §2.11.1). Over or under-reaction would constitute inefficiency. Broer & Kohlhas (2018) and Bordalo, Gennaioli, Ma, & Shleifer (2018) find that agents over-react, generating a negative correlation between their forecast revision and error. The forecast is revised by more than is warranted by the new information (over-confidence regarding the value of the new information). Bordalo et al. (2018) explain the over-reaction with a model of ‘diagnostic’ expectations, whereas Fuhrer (2018) finds ‘intrinsic inflation persistence’: individuals under-react to new information, smoothing their responses to news.

The empirical evidence is often equivocal, and might reflect: the vintage of data assumed for the outturns; whether allowance is made for ‘instabilities’ such as alternating over- and under-prediction (Rossi & Sekhposyan, 2016) and the assumption of squared-error loss (see, for example, Patton & Timmermann, 2007; Clements, 2014b).

Research has also focused on the histogram forecasts produced by a number of macro-surveys. Density forecast evaluation techniques such as the probability integral transform102 have been applied to histogram forecasts, and survey histograms have been compared to benchmark forecasts (see, for example, Bao, Lee, & Saltoglu, 2007; Clements, 2018; Hall & Mitchell, 2009). Research has also considered uncertainty measures based on the histograms Clements (2014a). §2.12.4 and §2.12.5 also discuss the evaluation and reliability of probabilistic forecasts.

Engelberg, Manski, & Williams (2009) and Clements (2009, 2010) considered the consistency between the point predictions and histogram forecasts. Reporting practices such as ‘rounding’ have also been considered (Binder, 2017; Clements, 2011; Manski & Molinari, 2010).

Clements (2019) reviews macroeconomic survey expectations.

3.3.2 Forecasting GDP and inflation103

As soon as Bayesian estimation of DSGEs became popular, these models have been employed in forecasting horseraces to predict the key macro variables, for example, Gross Domestic Product (GDP) and inflation, as discussed in Del Negro & Schorfheide (2013). The forecasting performance is evaluated using rolling or recursive (expanded) prediction windows (for a discussion, see Cardani, Paccagnini, & Villa, 2015). DSGEs are usually estimated using revised data, but several studies propose better results estimating the models using real-time data (see, for example, Del Negro & Schorfheide, 2013; Cardani et al., 2019; Kolasa & Rubaszek, 2015b; Wolters, 2015).

The current DSGE model forecasting compares DSGE models to competitors (see §2.3.15 for an introduction to DSGE models). Among them, we can include the Random Walk (the naive model which assumes a stochastic trend), the Bayesian VAR models (Minnesota Prior à la Doan, Litterman, & Sims (1984); and Large Bayesian VAR à la Bańbura, Giannone, & Reichlin (2010)), the Hybrid-Models (the DSGE-VAR à la Del Negro & Schorfheide (2004); and the DSGE-Factor Augmented VAR à la Consolo, Favero, & Paccagnini (2009)), and the institutional forecasts (Greenbook, Survey Professional Forecasts, and the Blue Chip, as illustrated in Edge & Gürkaynak (2010)).

Table 2 summarises the current DSGE forecasting literature mainly for the US and Euro Area and provided by estimating medium-scale models. As general findings, DSGEs can outperform other competitors, with the exception for the Hybrid-Models, in the medium and long-run to forecast GDP and inflation. In particular, Smets & Wouters (2007) was the first empirical evidence of how DSGEs can be competitive with forecasts from Bayesian VARs, convincing researchers and policymakers in adopting DSGEs for prediction evaluations. As discussed in Del Negro & Schorfheide (2013), the accuracy of DSGE forecasts depends on how the model is able to capture low-frequency trends in the data. To explain the macro-finance linkages during the Great Recession, the Smets and Wouters model was also compared to other DSGE specifications including the financial sector. For example, Del Negro & Schorfheide (2013), Kolasa & Rubaszek (2015a), Galvão, Giraitis, Kapetanios, & Petrova (2016), and Cardani et al. (2019) provide forecasting performance for DSGEs with financial frictions. This strand of the literature shows how this feature can improve the baseline Smets and Wouters predictions for the business cycle, in particular during the recent Great Recession.

However, the Hybrid-Models always outperform the DSGEs thanks to the combination of the theory-based model (DSGE) and the statistical representation (VAR or Factor Augmented VAR), as illustrated by Del Negro & Schorfheide (2004) and Consolo et al. (2009).

Moreover, several studies discuss how prediction performance could depend on the parameters’ estimation. Kolasa & Rubaszek (2015b) suggest that updating DSGE model parameters only once a year is enough to have accurate and efficient predictions about the main macro variables.

| Competitor | Reference |

|---|---|

| Hybrid Models | US: (Del Negro & Schorfheide, 2004), (Consolo et al., 2009) |

| Random Walk | US: (Gürkaynak, Kısacıkoğlu, & Rossi, 2013), Euro Area: (Warne et al., 2010), (Smets, Warne, & Wouters, 2014) |

| Bayesian VAR | US: (Smets & Wouters, 2007), (Gürkaynak et al., 2013), (Wolters, 2015), (Bekiros & Paccagnini, 2014), (S. Bekiros & Paccagnini, 2015), (S. D. Bekiros & Paccagnini, 2015), Euro Area: (Warne et al., 2010) |

| Time-Varying VAR and Markov-Switching | US: (S. Bekiros et al., 2016), Euro Area: (S. D. Bekiros & Paccagnini, 2016) |

| Institutional Forecasts | US: (Edge & Gürkaynak, 2010), (Kolasa et al., 2012), (Del Negro & Schorfheide, 2013), (Wolters, 2015) |

3.3.3 Forecasting unemployment104

Unemployment has significant implications at both the micro and macro levels, influencing individual living standards, health and well-being, as well as imposing direct costs on the economy. Given its importance, policy-makers put unemployment at the heart of their economic plans, and as such require accurate forecasts to feed into economic policy decisions. Unemployment is described as a lagging indicator of the economy, with characteristics including business cycles and persistence. Despite this, forecasting the unemployment rate is difficult, because the data are highly non-stationary with abrupt distributional shifts, but persistence within regimes. In this section we focus on methods used to forecast the aggregate unemployment rate.

Unemployment is the outcome of supply and demand for labour, aggregated across all prospective workers, with labour demand derived from demand for goods and services. This implies a highly complex data generating process. Empirical forecasting models tend to simplify this relationship, with two approaches dominating the literature. The first is based on the Phillips (1958) curve capturing a non-linear relationship between nominal wage inflation and the unemployment rate, or the relation between unemployment and output described as Okun’s (1962) Law. The second uses the time-series properties of the data to produce statistical forecasts, such as univariate linear models (for example, ARIMA or unobserved component models; see §2.3.4 and §2.3.6), multivariate linear models (for example, VARMA or CVAR; see §2.3.9), various threshold autoregressive models (see §2.3.13), Markov Switching models (see §2.3.12) and Artificial Neural Networks (see §2.7.8).

The empirical literature is inconclusive as to the ‘best’ forecasting models for unemployment, which varies by country, time period and forecast horizon. There is some evidence that non-linear statistical models tend to outperform within business cycle contractions or expansions, but perform worse across business cycles (see, for example, Montgomery, Zarnowitz, Tsay, & Tiao, 1998; Koop & Potter, 1999; Rothman, 1998), whereas Proietti (2003) finds that linear models characterised by higher persistence perform significantly better. Evidence of non-linearities is found by Peel & Speight (2000), Milas & Rothman (2008) and Johnes (1999), and Gil-Alana (2001) finds evidence of long-memory. Barnichon & Garda (2016) applies a flow approach to unemployment forecasting and finds improvements, as does Smith (2011).

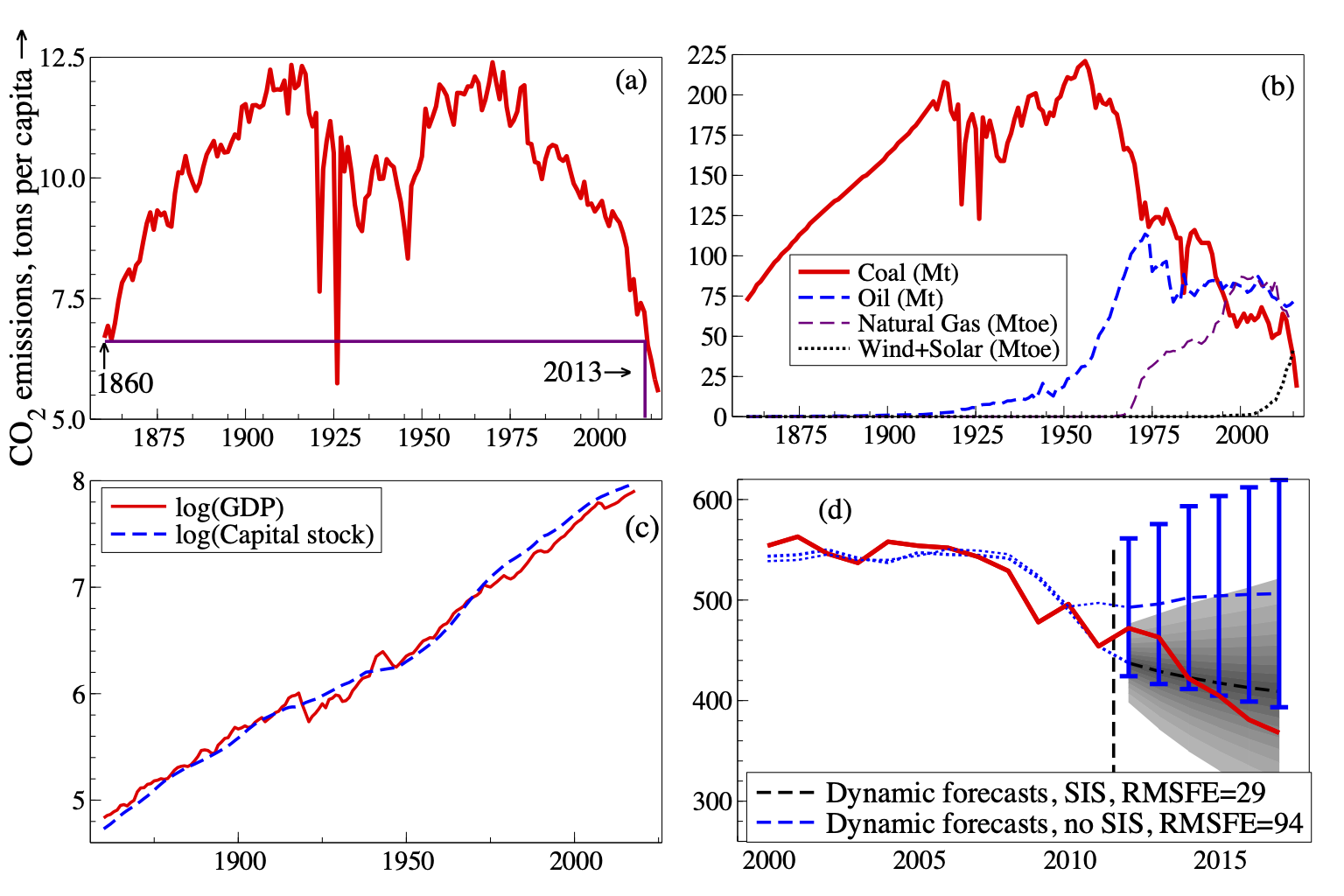

One approach that does yield accurate forecasts is to use a measure of profitability as the explanatory variable, assuming that unemployment will fall when hiring is profitable. D. F. Hendry (2001) proxies profitability (\(\pi\)) by the gap between the real interest rate (reflecting costs) and the real growth rate (reflecting the demand side), such that the unemployment rate rises when the real interest rate exceeds the real growth rate, and vice versa: \[\pi_{t} = \left(R_{L}-\Delta p -\Delta y\right)_{t}\] where \(R_{L}\) is the long-term interest rate, \(\Delta p\) is a measure of inflation and \(\Delta y\) is a measure of output growth. This is then embedded within a dynamic equilibrium correction model, using impulse indicator saturation (IIS: D. F. Hendry et al., 2008b; Johansen & Nielsen, 2009) and step indicator saturation (SIS: Castle et al., 2015a) to capture outliers, breaks and regime shifts, as well as allowing for any non-linearities using Taylor expansions for the regressors. The resulting forecasts perform well over the business cycle relative to alternative statistical models (also see (Hendry, 2015), and (J. L. Castle et al., 2020c)).

Forecasts from models of unemployment could be improved with either better economic theories of aggregate unemployment,105 or more general empirical models that tackle stochastic trends, breaks, dynamics, non-linearities and interdependence,106 or better still, both. The COVID-19 pandemic and subsequent lockdown policies highlight just how important forecasts of unemployment are (Castle, Doornik, & Hendry, 2021).

3.3.4 Forecasting productivity107

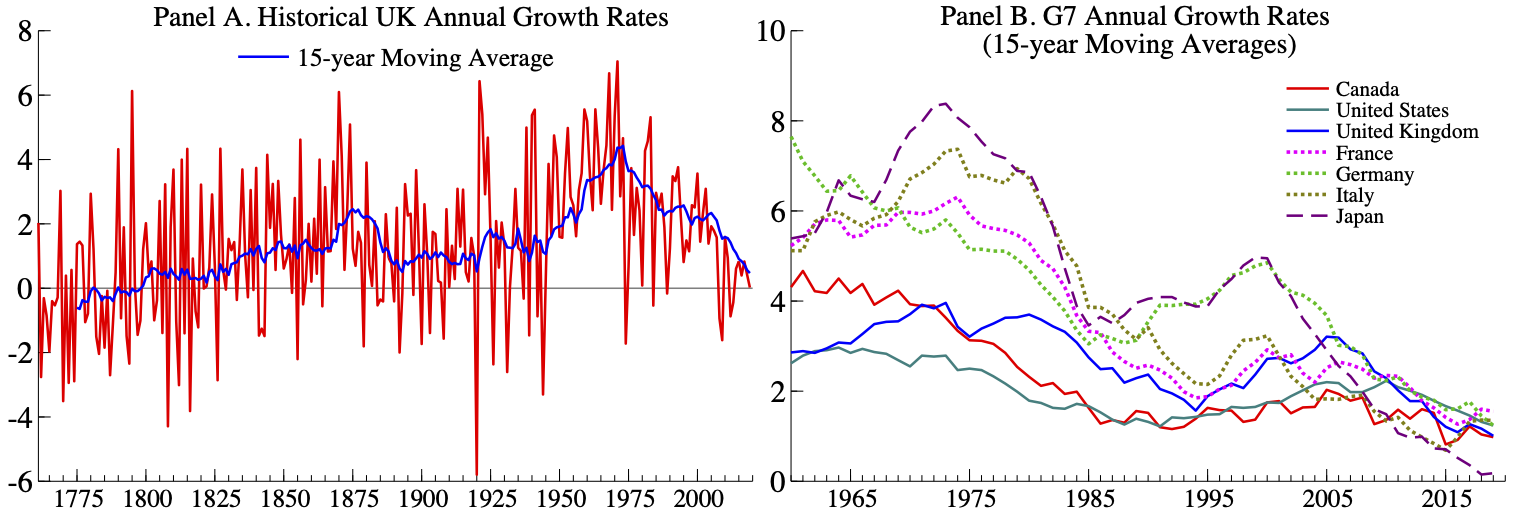

The growth of labour productivity, measured by the percent change in output per hours worked, has varied dramatically over the last 260 years. In the UK it ranged from -5.8% at the onset of the 1920 Depression to just over 7% in 1971; see panel A in Figure 7. Productivity growth is very volatile and has undergone large historical shifts with productivity growth averaging around 1% between 1800-1950 followed by an increase in the average annual growth to 3% between 1950-1975. Since the mid-1970’s productivity growth has gradually declined in many developed economies; see panel B of Figure 7. In the decade since 2009, 2% annual productivity growth was an upper bound for most G7 countries.

Figure 7: Productivity growth (output per total hours worked). Sources: Bank of England and Penn World Table Version 10.0.

The most common approach for forecasting productivity is to estimate the trend growth in productivity using aggregate data. For example, Gordon (2003) considers three separate approaches for calculating trend labor productivity in the United States based on (i) average historical growth rates outside of the business cycle, (ii) filtering the data using the HP filter (Hodrick & Prescott, 1997), and (iii) filtering the data using the Kalman filter (see Kalman, 1960). The Office for Budget Responsibility (OBR) in the UK and the Congressional Budget Office (CBO) in the US follow similar approaches for generating its forecasts of productivity based on average historical growth rates as well as judgments about factors that may cause productivity to deviate from its historical trend in the short-term.108 Alternative approaches include forecasting aggregate productivity using disaggregated firm-level data (see Bartelsman, Kurz, & Wolf, 2011; Bartelsman & Wolf, 2014 and §2.10.1) and using time-series models (see Žmuk, Dumičić, & Palić, 2018 and §2.3.4).

In the last few decades there have been several attempts to test for time-varying trends in productivity and to allow for them. However, the focus of these approaches has been primarily on the United States (Hansen, 2001; Roberts, 2001), which saw a sharp rise in productivity growth in the 1990’s that was not mirrored in other countries (Basu, Fernald, Oulton, & Srinivasan, 2003). Test for shifts in productivity growth rates in other advanced economies did not find evidence of a changes in productivity growth until well after the financial crisis in 2007 (Benati, 2007; Glocker & Wegmüller, 2018; Turner & Boulhol, 2011).

A more recent approach by Martinez et al. (2021) allows for a time-varying long-run trend in UK productivity. They show that are able to broadly replicate the OBR’s forecasts using a quasi-transformed autoregressive model with one lag, a constant, and a trend. The estimated long-run trend is just over 2% per year through 2007 Q4 which is consistent with the OBR’s assumptions about the long-run growth rate of productivity (OBR, 2019). However, it is possible to dramatically improve upon OBR’s forecasts in real-time by allowing for the long-term trend forecast to adjust based on more recent historical patterns. By taking a local average of the last four years of growth rates, Martinez et al. (2021) generate productivity forecasts whose RMSE is on average more than 75% smaller than OBR’s forecasts extending five-years-ahead and is 84% smaller at the longest forecast horizon.

3.3.5 Fiscal forecasting for government budget surveillance109

Recent economic recessions have led to a renewed interest in fiscal forecasting, mainly for deficit and debt surveillance. This was certainly true in the case of the 2008 recession, and looks to become even more important in the current economic crisis brought on by the COVID-19 pandemic. This is particularly important in Europe, where countries are subject to strong fiscal monitoring mechanisms. Two main themes can be detected in the fiscal forecasting literature (Leal, Pérez, Tujula, & Vidal, 2008). First, investigate the properties of forecasts in terms of bias, efficiency and accuracy. Second, check the adequacy of forecasting procedures.

The first topic has its own interest for long, mainly restricted to international institutions (Artis & Marcellino, 2001). Part of the literature, however, argue that fiscal forecasts are politically biased, mainly because there is usually no clear distinction between political targets and rigorous forecasts (Frankel & Schreger, 2013; Strauch, Hallerberg, & Hagen, 2004). In this sense, the availability of forecasts from independent sources is of great value (Jonung & Larch, 2006). But it is not as easy as saying that independent forecasters would improve forecasts due to the absence of political bias, because forecasting accuracy is compromised by complexities of data, country-specific factors, outliers, changes in the definition of fiscal variables, etc. Very often some of these issues are known by the staff of organisations in charge of making the official statistics and forecasts long before the general public, and some information never leaves such institutions. So this insider information is actually a valuable asset to improve forecasting accuracy (Leal et al., 2008).

As for the second issue, namely the accuracy of forecasting methods, the literature can be divided into two parts, one based on macroeconomic models with specific fiscal modules that allows to analyse the effects of fiscal policy on macro variables and vice versa (see Favero & Marcellino (2005) and references therein), and the other based on pure forecasting methods and comparisons among them. This last stream of research basically resembles closely what is seen in other forecasting areas: (i) there is no single method outperforming the rest generally, (ii) judgmental forecasting is especially important due to data problems (see §2.11), and (iii) combination of methods tends to outperform individual ones, see Leal et al. (2008) and §2.6.

Part of the recent literature focused on the generation of very short-term public finance monitoring systems using models that combine annual information with intra-annual fiscal data (Pedregal & Pérez, 2010) by time aggregation techniques (see §2.10.2), often set up in a SS framework (see §2.3.6). The idea is to produce global annual end-of-year forecasts of budgetary variables based on the most frequently available fiscal indicators, so that changes throughout the year in the indicators can be used as early warnings to infer the changes in the annual forecasts and deviations from fiscal targets (Pedregal, Pérez, & Sánchez, 2014).

The level of disaggregation of the indicator variables are established according to the information available and the particular objectives. The simplest options are the accrual National Accounts annual or quarterly fiscal balances running on their cash monthly counterparts. A somewhat more complex version is the previous one with all the variables broken down into revenues and expenditures. Other disaggregation schemes have been applied, namely by region, by administrative level (regional, municipal, social security, etc.), or by items within revenue and/or expenditure (VAT, income taxes, etc. Paredes, Pedregal, & Pérez, 2014; Asimakopoulos, Paredes, & Warmedinger, 2020).

Unfortunately, what is missing is a comprehensive and transparent forecasting system, independent of Member States, capable of producing consistent forecasts over time and across countries. This is certainly a challenge that no one has yet dared to take up.

3.3.6 Interest rate prediction110

The (spot) rate on a (riskless) bond represents the ex-ante return (yield) to maturity which equates its market price to a theoretical valuation. Modelling and predicting default-free, short-term interest rates are crucial tasks in asset pricing and risk management. Indeed, the value of interest rate–sensitive securities depends on the value of the riskless rate. Besides, the short interest rate is a fundamental ingredient in the formulation and transmission of the monetary policy (see, for example, §2.3.15). However, many popular models of the short rate (for instance, continuous time, diffusion models) fail to deliver accurate out-of-sample forecasts. Their poor predictive performance may depend on the fact that the stochastic behaviour of short interest rates may be time-varying (for instance, it may depend on the business cycle and on the stance of monetary policy).

Notably, the presence of nonlinearities in the conditional mean and variance of the short-term yield influences the behaviour of the entire term structure of spot rates implicit in riskless bond prices. For instance, the level of the short-term rate directly affects the slope of the yield curve. More generally, nonlinear rate dynamics imply a nonlinear equilibrium relationship between short and long-term yields. Accordingly, recent research has reported that dynamic econometric models with regime shifts in parameters, such as Markov switching (MS; see §2.3.12) and threshold models (see §2.3.13), are useful at forecasting rates.

The usefulness of MS VAR models with term structure data had been established since Hamilton (1988) and Garcia & Perron (1996): single-state, VARMA models are overwhelmingly rejected in favour of multi-state models. Subsequently, a literature has emerged that has documented that MS models are required to successfully forecast the yield curve. Lanne & Saikkonen (2003) showed that a mixture of autoregressions with two regimes improves the predictions of US T-bill rates. Ang & Bekaert (2002) found support for MS dynamics in the short-term rates for the US, the UK, and Germany. Cai (1994) developed a MS ARCH model to examine volatility persistence, reflecting a concern that it may be inflated by regimes. Gray (1996) generalised this attempt to MS GARCH and reported improvements in pseudo out-of-sample predictions. Further advances in the methods and applications of MS GARCH are in Haas, Mittnik, & Paolella (2004) and Smith (2002). A number of papers have also investigated the presence of regimes in the typical factors (level, slope, and convexity) that characterise the no-arbitrage dynamics of the term structure, showing the predictive benefits of incorporating MS (see, for example, M. Guidolin & Pedio, 2019; Hevia, Gonzalez-Rozada, Sola, & Spagnolo, 2015).

Alternatively, a few studies have tried to capture the time-varying, nonlinear dynamics of interest rates using threshold models. As discussed by Pai & Pedersen (1999), threshold models have an advantage compared to MS ones: the regimes are not determined by an unobserved latent variable, thus fostering interpretability. In most of the applications to interest rates, the regimes are determined by the lagged level of the short rate itself, in a self-exciting fashion. For instance, Pfann, Schotman, & Tschernig (1996) explored nonlinear dynamics of the US short-term interest rate using a (self-exciting) threshold autoregressive model augmented by conditional heteroskedasticity (namely, a TAR-GARCH model) and found strong evidence of the presence of two regimes. More recently, also Gospodinov (2005) used a TAR-GARCH to predict the short-term rate and showed that this model can capture some well-documented features of the data, such as high persistence and conditional heteroskedasticity.

Another advantage of nonlinear models is that they can reproduce the empirical puzzles that plague the expectations hypothesis of interest rates (EH), according to which it is a weighted average of short-term rates to drive longer-term rates (see, for example, Bansal, Tauchen, & Zhou, 2004; Dai, Singleton, & Yang, 2007). For instance, while Bekaert, Hodrick, & Marshall (2001) show single-state VARs cannot generate distributions consistent with the EH, Guidolin & Timmermann (2009) find that the optimal combinations of lagged short and forward rates depend on regimes so that the EH holds only in some states.

As widely documented (see, for instance, M. Guidolin & Thornton, 2018), the predictable component in mean rates is hardly significant. As a result, the random walk remains a hard benchmark to outperform as far as the prediction of the mean is concerned. However, density forecasts reflect all moments and the models that capture the dynamics of higher-order moments tend to perform best. MS models appear at the forefront of a class of non-linear models that produce accurate density predictions (see, for example, Hong, Li, & Zhao, 2004; Maheu & Yang, 2016). Alternatively, Pfann et al. (1996) and more recently Dellaportas, Denison, & Holmes (2007) estimated TAR models to also forecast conditional higher order moments and all report reasonable accuracy.

Finally, a literature has strived to fit rates not only under the physical measure, i.e., in time series, but to predict rates when MS enters the pricing kernel, the fundamental pricing operator. A few papers have assumed that regimes represent a new risk factor (see, for instance, Dai & Singleton, 2003). This literature reports that MS models lead to a range of shapes for nominal and real term structures (see, for instance, Veronesi & Yared, 1999). Often the model specifications that are not rejected by formal tests include regimes (Ang, Bekaert, & Wei, 2008; Bansal & Zhou, 2002).

To conclude, it is worthwhile noting that, while threshold models are more interpretable, MS remain a more popular alternative for the prediction of interest rates. This is mainly due to the fact that statistical inference for threshold regime switching models poses some challenges, because the likelihood function is discontinuous with respect to the threshold parameters.

3.3.7 House price forecasting111

The boom and bust in housing markets in the early and mid 2000s and its decisive role in the Great Recession has generated a vast interest in the dynamics of house prices and emphasised the importance of accurately forecasting property price movements during turbulent times. International organisations, central banks and research institutes have become increasingly engaged in monitoring the property price developments across the world.112 At the same time, a substantial empirical literature has developed that deals with predicting future house price movements (for a comprehensive survey see Ghysels, Plazzi, Valkanov, & Torous, 2013). Although this literature concentrates almost entirely on the US (see, for example, Rapach & Strauss, 2009; Bork & Møller, 2015), there are many other countries, such as the UK, where house price forecastability is of prime importance. Similarly to the US, in the UK, housing activities account for a large fraction of GDP and of households’ expenditures; real estate property comprises a significant component of private wealth and mortgage debt constitutes a main liability of households (Office for National Statistics, 2019).

The appropriate forecasting model has to reflect the dynamics of the specific real estate market and take into account its particular characteristics. In the UK, for instance, there is a substantial empirical literature that documents the existence of strong spatial linkages between regional markets, whereby the house price shocks emanating from southern regions of the country, and in particular Greater London, have a tendency to spread out and affect neighbouring regions with a time lag (see, for example, Cook & Thomas, 2003; Antonakakis, Chatziantoniou, Floros, & Gabauer, 2018 inter alia; Holly, Pesaran, & Yamagata, 2010); see also §2.3.10 on forecasting functional data.

Recent evidence also suggests that the relationship between real estate valuations and conditioning macro and financial variables displayed a complex of time-varying patterns over the previous decades (Aizenman & Jinjarak, 2013). Hence, predictive methods that do not allow for time-variation in both predictors and their marginal effects may not be able to capture the complex house price dynamics in the UK (see Yusupova, Pavlidis, & Pavlidis, 2019 for a comparison of forecasting accuracy of a battery of static and dynamic econometric methods).

An important recent trend is to attempt to incorporate information from novel data sources (such as newspaper articles, social media, etc.) in forecasting models as a measure of expectations and perceptions of economic agents (see also §2.9.3). It has been shown that changes in uncertainty about house prices impact on housing investment and real estate construction decisions (Banks, Blundell, Oldfield, & Smith, 2015; Cunningham, 2006; Oh & Yoon, 2020), and thus incorporating a measure of uncertainty in the forecasting model can improve the forecastability of real estate prices. For instance in the UK, the House Price Uncertainty (HPU) index (Yusupova, Pavlidis, Paya, & Peel, 2020), constructed using the methodology outlined in Baker, Bloom, & Davis (2016),113 was found to be important in predicting property price inflation ahead of the house price collapse of the third quarter of 2008 and during the bust phase (Yusupova et al., 2019). Along with capturing the two recent recessions (in the early 1990s and middle 2000s) this index also reflects the uncertainly related to the EU Referendum, Brexit negotiations and COVID-19 pandemic.

3.3.8 Exchange rate forecasting114

Exchange rates have long fascinated and puzzled researchers in international finance. The reason is that following the seminal paper of Meese & Rogoff (1983), the common wisdom is that macroeconomic models cannot outperform the random walk in exchange rate forecasting (see Rossi, 2013 for a survey). This view is difficult to reconcile with the strong belief that exchange rates are driven by fundamentals, such as relative productivity, external imbalances, terms of trade, fiscal policy or interest rate disparity (Couharde, Delatte, Grekou, Mignon, & Morvillier, 2018; Lee, Milesi-Ferretti, & Ricci, 2013; MacDonald, 1998). These two contradicting assertions by the academic literature is referred to as “exchange rate disconnect puzzle”.

The literature provides several explanations for this puzzle. First, it can be related to the forecast estimation error (see §2.5.2). The studies in which models are estimated with a large panels of data (Engel, Mark, & West, 2008; Ince, 2014; Mark & Sul, 2001), long time series (Lothian & Taylor, 1996) or calibrated (Ca’ Zorzi & Rubaszek, 2020) deliver positive results on exchange rate forecastability. Second, there is ample evidence that the adjustment of exchange rates to equilibrium is non-linear (Curran & Velic, 2019; Taylor & Peel, 2000), which might diminish the out-of-sample performance of macroeconomic models (Kilian & Taylor, 2003; Lopez-Suarez & Rodriguez-Lopez, 2011). Third, few economists argue that the role of macroeconomic fundamentals may be varying over time and this should be accounted for in a forecasting setting (Beckmann & Schussler, 2016; Byrne, Korobilis, & Ribeiro, 2016).

The dominant part of the exchange rate forecasting literature investigates which macroeconomic model performs best out-of-sample. The initial studies explored the role of monetary fundamentals to find that these models deliver inaccurate short-term and not so bad long-term predictions in comparison to the random walk (Mark, 1995; Meese & Rogoff, 1983). In a comprehensive study from mid-2000s, Cheung, Chinn, & Pascual (2005) showed that neither monetary, uncovered interest parity (UIP) nor behavioural equilibrium exchange rate (BEER) model are able to outperform the no-change forecast. A step forward was made by Molodtsova & Papell (2009), who proposed a model combining the UIP and Taylor rule equations and showed that it delivers competitive exchange rate forecasts. This result, however, has not been confirmed by more recent studies (Cheung, Chinn, Pascual, & Zhang, 2019; Engel, Lee, Liu, Liu, & Wu, 2019). In turn, Ca’ Zorzi & Rubaszek (2020) argue that a simple method assuming gradual adjustment of the exchange rate towards the level implied by the Purchasing Power Parity (PPP) performs well over shorter as well as longer horizon. This result is consistent with the results of Ca’ Zorzi et al. (2017) and Eichenbaum, Johannsen, & Rebelo (2017), who showed that exchange rates are predictable within a general equilibrium DSGE framework (see §2.3.15), which encompasses an adjustment of the exchange rate to a PPP equilibrium. Finally, Ca’ Zorzi et al. (2020) discuss how extending the PPP framework for other fundamentals within the BEER framework is not helping in exchange rate forecasting. Overall, at the current juncture it might be claimed that “exchange rate disconnect puzzle” is still puzzling, with some evidence that methods based on PPP and controlling the estimation forecast error can deliver more accurate forecast than the random walk benchmark. A way forward to account for macroeconomic variables in exchange rate forecasting could be to use variable selection methods that allow to control for the estimation error (see §2.5.3).

3.3.9 Financial time series forecasting with range-based volatility models115